Your Local Police Department May Have Used Clearview AI to Surveil You

- Nearly one in five of the public entities surveyed in the United States has used Clearview AI facial recognition products.

- BuzzFeed News simply asked the entities about it, but most either did not respond or declined to comment.

- In many cases, Clearview AI appears to have pushed trial versions of the product to police departments.

Undoubtedly, the deployment of Clearview AI’s facial recognition systems is widespread in the United States, but exactly where such systems are being used, for what purposes, and on what legal basis remains blurry. A recent BuzzFeed News report offers some insight into the use of Clearview AI solutions by local police departments, some of which pretend not to remember whether they used a powerful facial recognition tool or not.

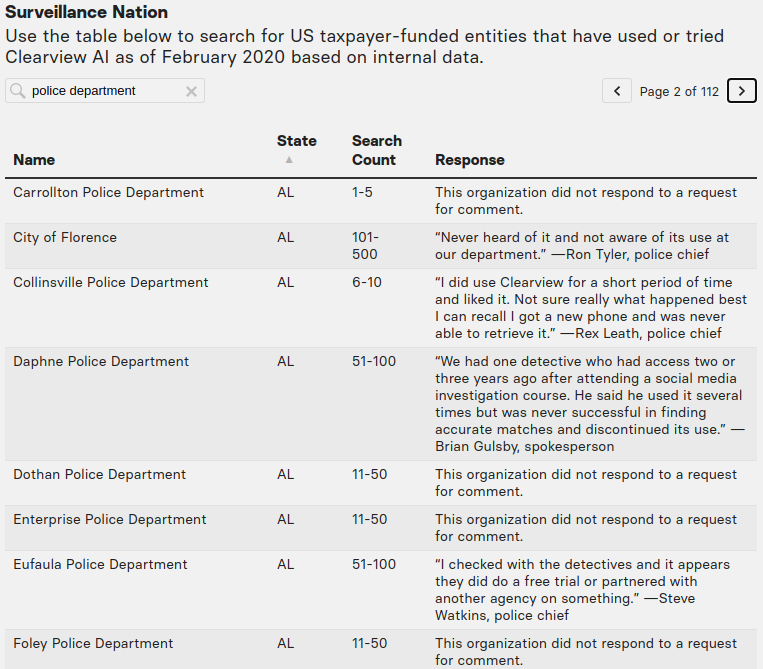

The publication decided to simply ask every US-based taxpayer-funded entity, including tribal, local, and state police departments, publicly funded university law enforcement bodies, district attorneys’ offices, and federal agencies such as the Air Force and Immigration and Customs Enforcement. From the responses it received, it built a database that people can search to see how likely it is that a given agency has used the tool.

Out of the 1,803 entities that populate the database, 335 admitted using Clearview AI, 97 declined to comment, and 1,161 organizations didn’t even bother to respond. This tells us that at least 18.6% have used Clearview AI’s facial recognition tools, which is already a significant share on its own, and the real figure could be higher given how many entities stayed silent.
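For readers who want to check the math, here is a minimal sketch that recomputes the shares from the four counts quoted above. The variable and function names are our own, and the "unaccounted" bucket is simply what remains after subtracting the three reported groups from the total; the figures quoted here do not say how those entities responded.

```python
# Counts as quoted in the article (from BuzzFeed News' database).
total_entities = 1803
admitted_use = 335
declined_to_comment = 97
no_response = 1161


def share(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal."""
    return round(100 * count / total, 1)


# Entities not covered by the three reported groups. The article's
# figures do not describe this remainder, so we only count it.
unaccounted = total_entities - (admitted_use + declined_to_comment + no_response)

admitted_pct = share(admitted_use, total_entities)
silent_pct = share(declined_to_comment + no_response, total_entities)

print(f"Admitted use: {admitted_pct}%")
print(f"No response or no comment: {silent_pct}%")
print(f"Not broken out in the quoted figures: {unaccounted} entities")
```

The 18.6% is a floor rather than an estimate: it counts only the entities that confirmed use, while roughly seven in ten gave no answer either way.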

If you want to check your own state or town, you’ll have to use BuzzFeed’s database tool, as the list is too extensive to include here. We tested several queries and found some notable deployment examples. The Daphne Police Department in Alabama admits that one detective used the system several times over a period of up to three years. The Eufaula Police Department also responded, saying that some detectives used a free trial in connection with another agency.

Even in privacy-minded California, where stricter laws apply, the Department of Health Care Services identified one employee who used a two-week trial of Clearview’s product, while the Long Beach Police Department used it for about a month. Similar use cases were reported by the Northern California Computer Crimes Task Force and the University of California San Diego Police Department.

Again, the above are just a few indicative examples, and the deployment of these privacy-invading tools doesn’t stop there. As we discussed before, it’s not only about Clearview AI either. Private entities in the United States have a wealth of facial recognition systems to choose from, offered by various vendors. This report, though, focuses on public entities, which makes the violations a lot harder to accept.