Microsoft Admits that Cortana is Sending Recordings to Reviewers

- Microsoft Cortana is recording what you say and sending it to human reviewers.

- Microsoft claims they only do this with users who have provided their permission.

- The company claims they need the recordings to help their AI deliver better translations.

It seems that all smart assistants made by tech behemoths are eavesdropping on us: they record what we say without being summoned, and then send the recordings to human reviewers who help the AI get better at understanding us. Last month, we covered the revelations about Google Assistant silently recording what users say and sending these audio snippets to Google’s contractors. Two weeks after that, it was Apple’s turn to explain itself when Siri was caught doing the exact same thing, with the added cringe of reviewers saying that it is easy to correlate the recordings with the identity of the iPhone or Watch owner. And finally, the time has come for Microsoft to admit they’re doing it too, so they have released a detailed privacy statement to explain why we shouldn’t worry about it.

Allegedly, Microsoft Cortana is listening to conversations in order to get better at voice translation, a feature currently offered by Skype and by Cortana directly. This, however, only happens after Microsoft has obtained the user’s permission. The reviewers of the audio recordings are contractors rather than employees of the tech titan, which introduces the same unwanted risk and uncertainty that we have with Google and Apple. As Microsoft points out in their statement:

“Microsoft collects voice data to provide and improve voice-enabled services like search, voice commands, dictation, or translation services. We strive to be transparent about our collection and use of voice data to ensure customers can make informed choices about when and how their voice data is used. Microsoft gets customers' permission before collecting and using their voice data. We also put in place several procedures designed to prioritize users' privacy before sharing this data with our vendors, including de-identifying data, requiring non-disclosure agreements with vendors and their employees, and requiring that vendors meet the high privacy standards set out in European law. We continue to review the way we handle voice data to ensure we make options as clear as possible to customers and provide strong privacy protections.”

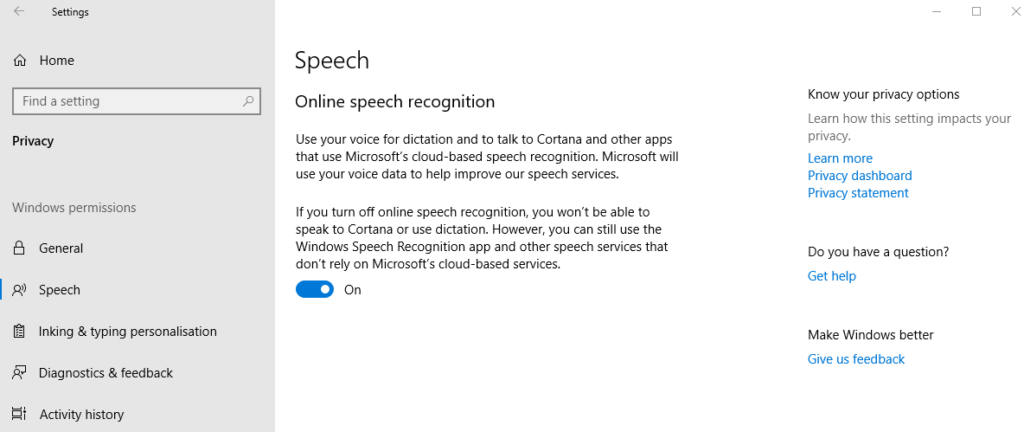

Of course, Microsoft claims that their affiliates and contractors who are doing the reviews have signed strict privacy protection agreements, so confidentiality is not at stake. This is exactly what Apple said about their own contractors, and yet it doesn’t sound very reassuring to people. This is why Microsoft gives clear instructions on how to opt out of their recording and analysis program if you don’t want to be a part of it anymore. You may do so through Cortana’s Permission settings, or you can clear your data by visiting your account’s privacy dashboard. Notice the part that says “helping improve Microsoft products and services” in the screenshot of the Cortana Permissions/Speech Privacy settings. They don’t clarify that this entails actual humans listening to your recordings, do they?

Apple told the public that they would suspend their “Siri Grading” program immediately following the public backlash. Google, for its part, received a notice from the privacy watchdog in Germany, which is about to launch an investigation into Google Assistant eavesdropping, so the company announced that it is stopping its program too, at least in Europe. Microsoft has not made a similar statement for now, so keep an eye on your Cortana settings and be mindful of your interactions with the AI.

Are you comfortable using AI-based assistants that send your recordings to human reviewers? Let us know in the comments down below, or share your thoughts on our socials, on Facebook and Twitter.