Fake GenAI Tools Distribute Malware Disguised as ChatGPT and Midjourney

- Cybercriminals distribute malware via tools masquerading as well-known generative AI assistants.

- Fake GenAI apps are spread via misleading ads, phishing sites, and browser extensions.

- Threat actors impersonate ChatGPT and Midjourney most often because of their popularity.

Cybercriminals are using fake generative AI (GenAI) tools as a lure to distribute malware. These fake programs impersonate ChatGPT, Midjourney, and other AI assistants and are spread via fake ads, phishing websites, and web browser extensions, according to the latest ESET Security report.

Threat actors regularly adapt their payloads to avoid detection by security tools. They hide malware behind tools pretending to be ChatGPT, video creator Sora AI, image generators Midjourney and DALL-E, and photo editor Evoto, and they bait victims with supposedly unreleased versions of these tools, such as “ChatGPT 5” or “DALL-E 3.”

Some of these fake tools may offer limited functionality, while others are outright scams designed to generate revenue for the developer. In principle, cybercriminals can hide any type of malware in these apps and malicious links, including infostealers, ransomware, and remote access Trojans (RATs).

Malware disguised as GenAI apps is mostly spread via phishing sites, which victims reach after clicking a link on social media (such as a fake Facebook ad) or in a malicious email or message. Over 650,000 attempts to access malicious domains containing “chapgpt” or similar text were recorded in the second half of 2023.

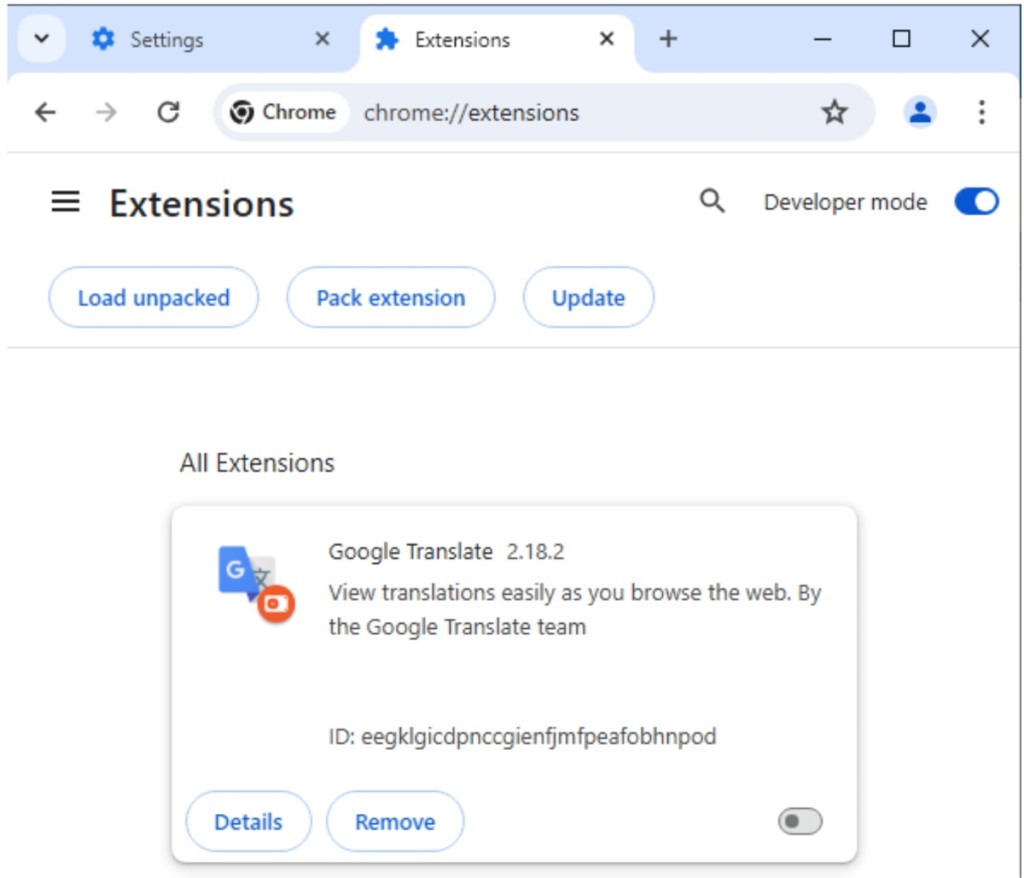
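The “chapgpt” domains mentioned above are a form of lookalike naming, and a defender can screen for it with a simple string comparison. The sketch below is only an illustration, not the method ESET used, which the report summary does not describe. The `BRANDS` watchlist, the `ALLOWLIST` of domains treated as genuine, the `max_distance` threshold, and the function names are all assumptions chosen for this example.

```python
# Illustrative lookalike-domain check; all names and thresholds are assumptions.
BRANDS = ("chatgpt", "midjourney")                          # hypothetical watchlist
ALLOWLIST = {"chatgpt.com", "openai.com", "midjourney.com"}  # treated as genuine here


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: insertions, deletions, substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                 # delete from a
                           cur[j - 1] + 1,              # insert into a
                           prev[j - 1] + (ca != cb)))   # substitute
        prev = cur
    return prev[-1]


def resembles_brand(domain: str, max_distance: int = 1):
    """Return the brand a domain label contains or nearly contains, else None."""
    name = domain.lower().rstrip(".")
    if name in ALLOWLIST:
        return None
    for label in name.split("."):
        for brand in BRANDS:
            # Slide windows of roughly the brand's length across the label.
            for size in range(len(brand) - max_distance,
                              len(brand) + max_distance + 1):
                for start in range(len(label) - size + 1):
                    window = label[start:start + size]
                    if edit_distance(window, brand) <= max_distance:
                        return brand
    return None


if __name__ == "__main__":
    for d in ("chapgpt-free.example", "midjourny-app.example", "example.org"):
        print(d, "->", resembles_brand(d))
```

A check like this flags both exact and one-character-off uses of a brand name, so it would need a maintained allowlist and further signals (domain age, hosting, page content) before being used to block anything.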

In other cases, Facebook ads that claimed to lead to the official website of OpenAI’s Sora or Google’s Gemini instead delivered a malicious web browser extension masquerading as Google Translate, which saw over 4,000 installation attempts.

The extension was in fact Rilide Stealer V4, an infostealer that targets Facebook credentials, according to ESET’s H1 2024 threat report. The same report says an installer for the Vidar infostealer was seen impersonating Midjourney.

Threat actors also hijacked legitimate accounts, rebranded them to look like authentic ChatGPT or other GenAI pages, and then ran fake ads through them.