Facebook Is Now Shrinking Fake News To Make It Less Visible

- Facebook now makes false news less visible to users by displaying it at a smaller size.

- The company uses machine learning to predict which stories are false.

- According to the company, this could reduce the spread of false news by 80 percent.

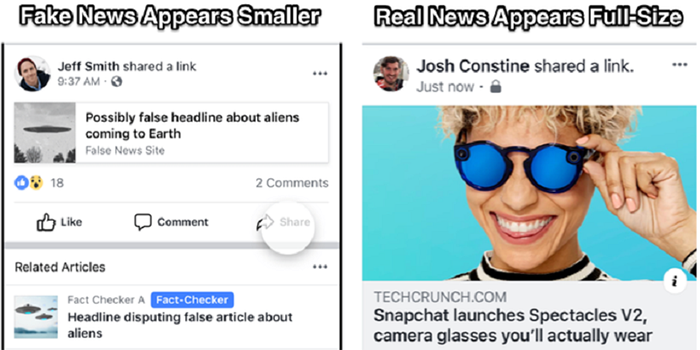

To stop false news from spreading across its platform, Facebook has been trying out different approaches. While some ideas, such as working with fact-checkers, proved useful, others were a total fiasco, such as putting red flags on debunked stories. Because these red flags tended to stir up even more anger among users who believed the stories, Facebook decided to drop them and try a more subtle approach, which it described in more detail at the Fighting Abuse @Scale event in San Francisco. From now on, debunked false news will be shown as a smaller link in the News Feed, while true stories confirmed by a fact-checker will be shown at a much larger size. Below the false story's link, related articles that debunk it are displayed. There is no flagging, just less visibility.

Image courtesy of TechCrunch

According to TechCrunch, Facebook uses machine learning to predict which stories are false by scanning them immediately after they are uploaded to the platform. The algorithm combines user reports with other signals to estimate whether a story is false. This speeds up the process: stories predicted to be false are moved to the front of the fact-checkers' queue. Currently, Facebook works with only 20 fact-checkers from different countries, but it is looking for more partners.

The company believes that this new approach, if implemented correctly, could reduce the spread of false news by 80 percent. Facebook needs to find the right solution before the next U.S. elections if it wants to restore its users' trust. This could also mean hiring a number of new engineers and content moderators in the near future to deal with the problem.