Cloudflare Route Leak Affected 15% of its Global Traffic

- Another BGP route leak causes havoc and global outages after getting propagated extensively.

- The culprit for the route leak seems to be a load balancing and route optimizing software tool.

- The incident shows how a single configuration mistake can lead to widespread internet outages.

Cloudflare issued an incident report yesterday about an extensive BGP route leak that affected 15% of its global traffic. Considering that Cloudflare is used by 16 million websites around the world, the incident had a broad reach: if you tried to browse a Cloudflare-backed website yesterday, you may have noticed outages and slow loading times. The route leak also affected access to some AWS (Amazon Web Services) servers. While the issue has now been resolved, the incident highlights the problem with BGP route leaks and the enormous impact that they can have on internet performance in general.

This is the same kind of incident that hit Google in November 2018, and the same rerouting issue that we reported a couple of weeks ago, both of which were attributed to China Telecom. BGP (Border Gateway Protocol) hijacking can occur when an ISP (Internet Service Provider) advertises IP addresses that don’t belong to its network, which draws foreign traffic through it and usually results in slower internet. It’s as if your traffic is taking the long way around between you and the website’s server, so timeouts and poor performance are to be expected. These hijacks can happen either intentionally or due to a misconfiguration.

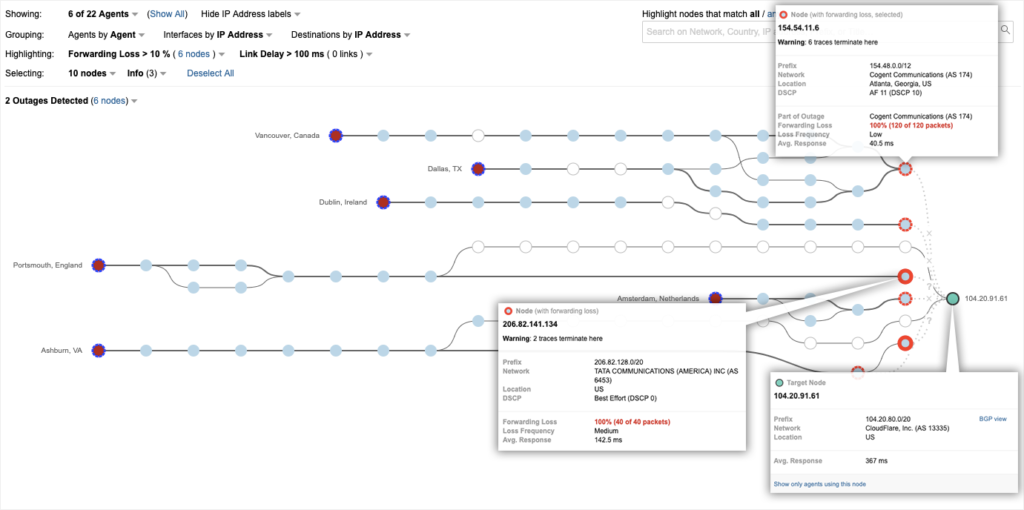

image source: blog.thousandeyes.com

According to a ThousandEyes report, this time Cloudflare’s traffic went through Verizon, with the routing anomaly also propagating to Qwest (now CenturyLink). Further investigation showed that it all started with a Pennsylvania-based metals company called “Allegheny Technologies”, which isn’t even an ISP. The researchers noticed that as the route leak developed, dozens of more-specific routes were introduced, capturing traffic from legitimate Cloudflare routes. This is often a sign of criminal activity: routers prefer the most specific route that matches a destination, so advertising more-specific routes works as an escalation method that pulls traffic out of the “generic lanes” and onto the more-specific paths, where the data can be siphoned.
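To see why more-specific routes capture traffic, here is a minimal Python sketch of longest-prefix-match route selection. The prefixes and AS numbers are hypothetical values from the documentation ranges, not the ones involved in this incident, and real routers apply further BGP tie-breakers that are left out here.

```python
import ipaddress

# Hypothetical routing table. Prefixes and AS numbers come from the
# documentation ranges and are NOT the ones seen in the actual leak.
ROUTES = [
    (ipaddress.ip_network("198.51.100.0/24"), "legitimate path (AS64496)"),
    (ipaddress.ip_network("198.51.100.0/25"), "leaked path (AS64511)"),
    (ipaddress.ip_network("198.51.100.128/25"), "leaked path (AS64511)"),
]


def best_route(destination, routes):
    """Return the (network, path) pair with the longest matching prefix."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, path) for net, path in routes if addr in net]
    if not matches:
        return None
    # The most specific route (largest prefix length) always wins.
    return max(matches, key=lambda match: match[0].prefixlen)


net, path = best_route("198.51.100.42", ROUTES)
print(net, "->", path)  # 198.51.100.0/25 -> leaked path (AS64511)
```

Even though the legitimate /24 is still in the table, every address inside it is also covered by one of the two leaked /25 routes, so all of the traffic follows the leaked path.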


However, passing traffic through a metals company via these more-specific routes does not point to anything malicious. The official explanation from Cloudflare is that Allegheny’s upstream provider, DQE, used a load balancing software tool that caused the initial route leak, something that the ThousandEyes investigation confirms, albeit cautiously. BGP route optimization software can introduce risks like extensive route leaking, so this could indeed be the case here. This would have remained an isolated incident if Verizon had proper routing safeguards in place to prevent the acceptance and propagation of these leaks. Instead, Verizon failed to stop the error, and failed to respond to Cloudflare in a timely manner.

It’s networking malpractice that the NOC at @verizon has still not replied to messages from other networking teams they impacted, including ours, hours after they mistakenly leaked a large chunk of the Internet’s routing table.

— Matthew Prince 🌥 (@eastdakota) June 24, 2019
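The article doesn’t spell out which routing safeguards Verizon was missing. As an illustration only, the Python sketch below models two commonly used ones, a per-session prefix limit and a prefix allow-list for a customer session. The function names, prefixes, and limits are assumptions made for the example, not a description of any real network’s configuration.

```python
import ipaddress

# Hypothetical policy for one customer BGP session (illustrative values only).
ALLOWED = [ipaddress.ip_network("203.0.113.0/24")]  # prefixes the customer registered
MAX_PREFIXES = 10  # drop the session if the customer announces more than this


def is_allowed(net, allowed, max_len=24):
    """Accept a route only if it sits inside a registered prefix and is not too specific."""
    return net.prefixlen <= max_len and any(
        net.version == a.version and net.subnet_of(a) for a in allowed
    )


def filter_session(announcements, allowed=ALLOWED, max_prefixes=MAX_PREFIXES):
    """Return (accepted_routes, session_up) for a batch of announced prefixes."""
    if len(announcements) > max_prefixes:
        # Prefix limit exceeded: accept nothing and take the session down.
        return [], False
    accepted = [p for p in announcements if is_allowed(ipaddress.ip_network(p), allowed)]
    return accepted, True


# The customer's own prefix passes; a leaked more-specific route is filtered out.
print(filter_session(["203.0.113.0/24", "198.51.100.0/25"]))
# (['203.0.113.0/24'], True)
```

With either check in place on the receiving side, a small customer that suddenly announces a large batch of someone else’s more-specific routes would be filtered or disconnected instead of having those routes passed on.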
