ChatGPT-4o Flooded with User Images for Studio Ghibli-Style Portraits, Raising Security Concerns

- Security researchers express concern over the growing popularity of ChatGPT-4o for generating animation-style images

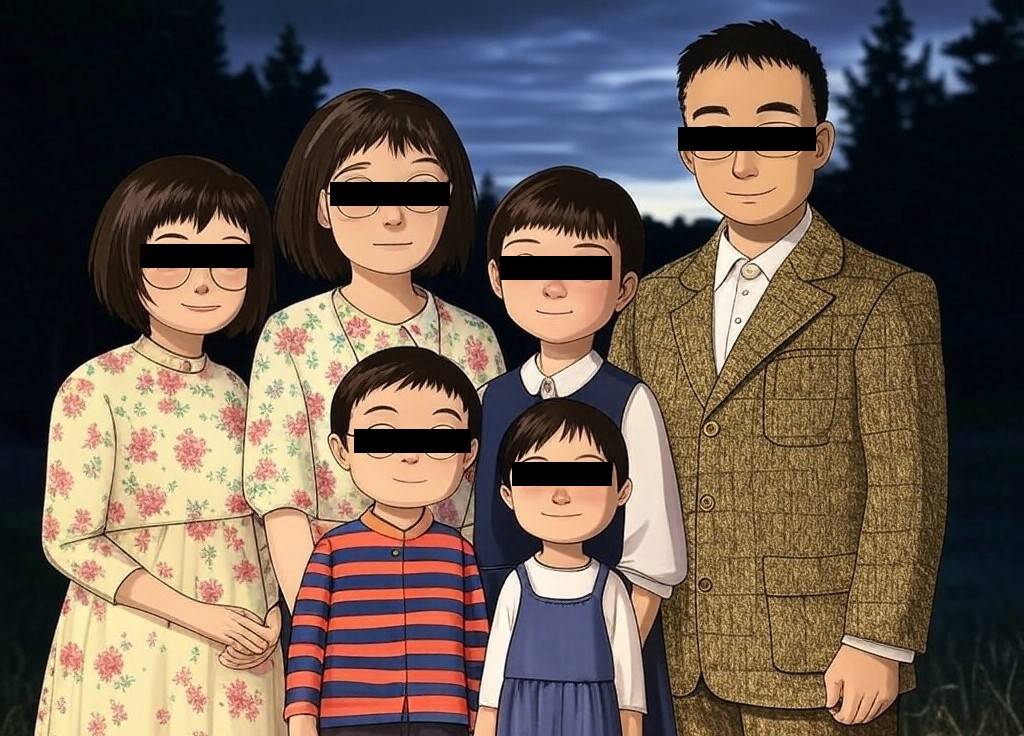

- Users are uploading their photos to create Studio Ghibli-inspired images, posing a risk to their privacy

- Studio Ghibli co-founder and Japanese animator Hayao Miyazaki has publicly expressed his dislike for AI-generated content

The popularity of ChatGPT’s latest model, ‘GPT-4o’, has reached a new high, with users on social media posting AI-generated images in the Studio Ghibli style. Artwork resembling the style of the classic Japanese animation studio has taken the tech world and its users by storm.

However, as with any app that processes personal data, security researchers have raised concerns over the privacy of the information users share to generate Ghibli-style images.

The 4o image generation feature accurately processes text prompts and draws on what OpenAI’s website calls 4o’s ‘inherent knowledge’ to create the image a user wants. Uploaded images are then customized based on instructions about colors, background, and other details.

The question remains whether OpenAI sought or was granted permission to train this model on artwork created by Studio Ghibli co-founder Hayao Miyazaki. Miyazaki has spoken out on several occasions against using quick AI methods to generate animation.

While users of all ages clearly enjoy both art and AI tools, as the social media frenzy around ChatGPT-4o’s Ghibli-style images shows, several questions about the original artist’s consent remain unanswered.

In a clip from 2016, Miyazaki was seen describing a demonstration of AI-generated animation as ‘an insult to life itself.’ Some users have condemned OpenAI for building Ghibli-style image generation into its tool.

Although users are free to upload their photos to see a different version of themselves through an AI model, it is just as important to exercise caution when sharing sensitive or personal photos, especially photos of other people.

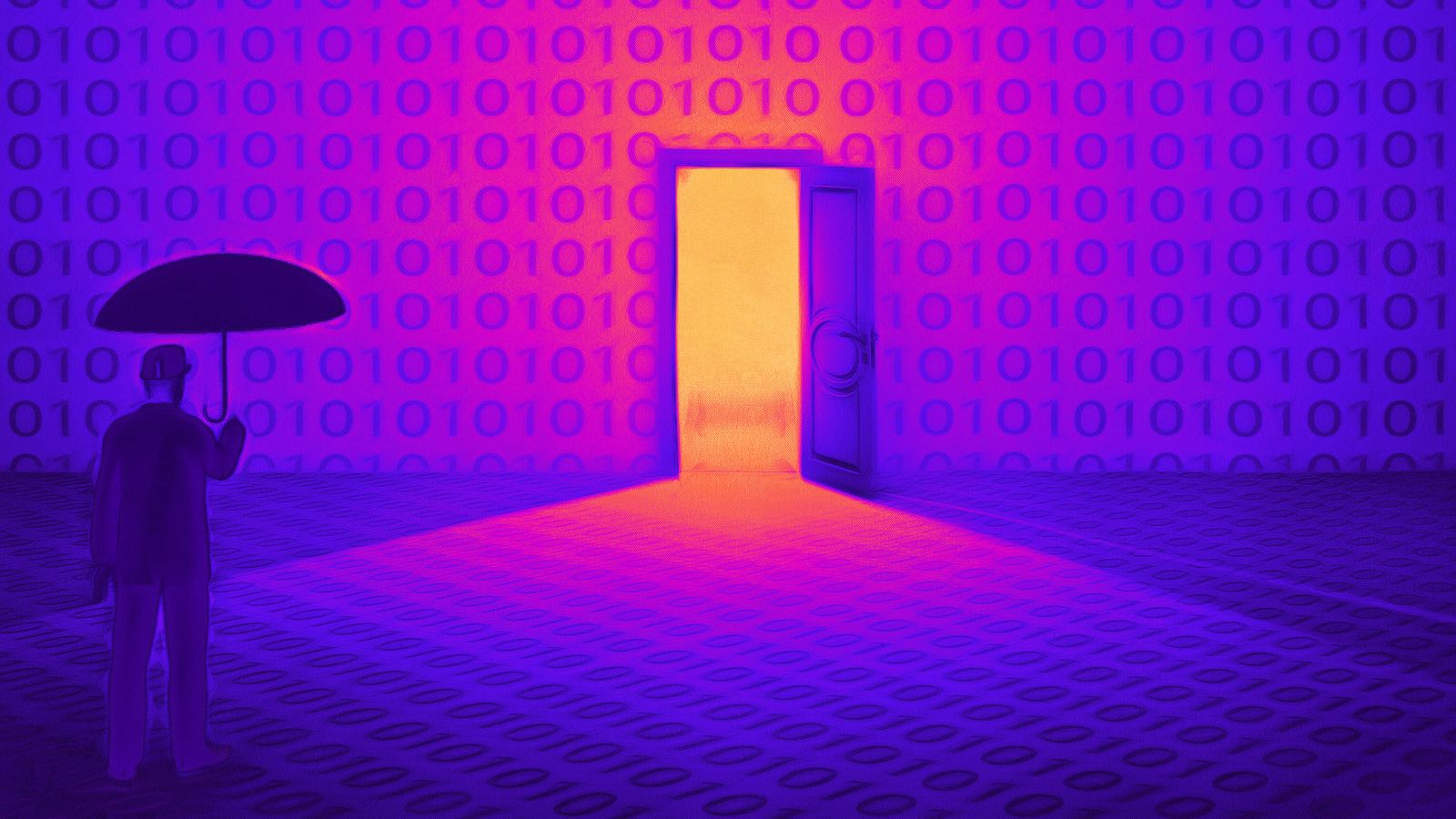

Threat actors have bypassed security measures to misuse stored data for unethical purposes, and OpenAI has been blocking users and banning ChatGPT accounts used for fraudulent activity.

Proton, a software company that specializes in VPN, email privacy, and password security, among other products, added a word of caution for users. It highlighted the risks posed by data breaches once personal photos have been shared, and how users lose control over that content.

The images could also be exploited for online harassment or extortion. In addition, companies may repurpose stored content to train their AI models and refine the output.