Caller ID Spoofing in “Vishing” Attacks Is a Growing Problem

- Caller ID spoofing that leads to vishing is turning into a growing problem, both in numbers and in success rates.

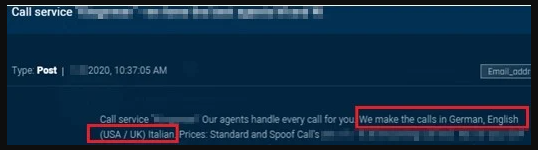

- The actors are turning to specialized platforms that promise to trick the targets in any language.

- Voice synthesis and AI-based mimicry are entering the game too, so the line between reality and fakery blurs further.

As many recent reports point out, malicious actors are increasingly using caller ID spoofing to trick people through phone phishing, or “vishing.” The FBI warned about the rise of this type of scam back in January, with over thirteen thousand people confirmed as victims of government impersonation attempts during 2019 alone. Now, SixGill researchers are diving deeper into the phenomenon, trying to understand what makes it so lucrative for the actors and so easy for so many of them to carry out.

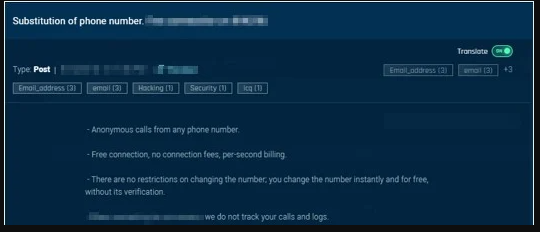

Caller ID spoofing means causing the telephone network to show the receiver a false caller identity in order to impersonate another entity. In essence, the scammer calls while hiding behind someone else’s number, so the target sees a number that doesn’t belong to the scammer. Obviously, if you manage to imitate government entities and services, financial institutions, IT support, or help desks, you have a solid base for a successful “vishing” attack. This kind of spoofing can be done through VoIP tools, the “Orange Box” hardware, or by paying specialized service providers to do it for you.

Source: SixGill

This last option is the one that is exploding right now, with many new platforms on the deep and dark web offering this kind of service to people who have no idea how to do it themselves. Outsourcing the vishing helps the buyer on both a technical and a practical level. Remember, calling takes time, and for many, speaking English isn’t their forte. Judging by what is currently on offer, most of the vishing attacks target victims in the United States and the United Kingdom.

Finally, to make the trickery more convincing, some are deploying AI deepfake voice generators that can imitate the tone and manner of speech of a specific person. Of course, these are highly targeted vishing attacks that require some level of preparation, but they are already happening. In March 2019, threat actors managed to mimic the voice of the CEO of a German firm, convincing a UK-based subsidiary to transfer $243,000 to a bank account that supposedly belonged to a supplier. Voice synthesis may sound too high-tech and still out of reach for many, but it’s perfectly doable with existing software and AI tools, and it’s only a matter of time before it turns into a widespread problem.