Apple Attempts to Ease Backlash by Sharing More Details on How Its Image Scanning Will Work

- Apple has published more details on how its image scanning tool will work, and in theory, it doesn't compromise user privacy.

- The system will only compare cryptographic image hashes with those in a database of known child abuse content.

- Craig Federighi says critics have profoundly misunderstood the system because Apple didn't communicate it clearly.

Apple's planned image scanning system, CSAM Detection, is designed to detect known child sexual abuse material (CSAM) and report it to the authorities to help underage victims. It has drawn heavy criticism and backlash from the community, and even from within the company itself. This has forced Apple to act to ease concerns fueled by uncertainty and opacity about how the system will work, so it had its software chief, Craig Federighi, step forward with some explanations.

In an interview with the Wall Street Journal, the high-ranking executive claimed that critics had misunderstood how the child protection features will work and that people's data remain safe and secure on Apple's platform. Federighi explained that the new system would be limited to images being stored in iCloud Photos, so there will be no device-wide or system-wide scanning of people's images.

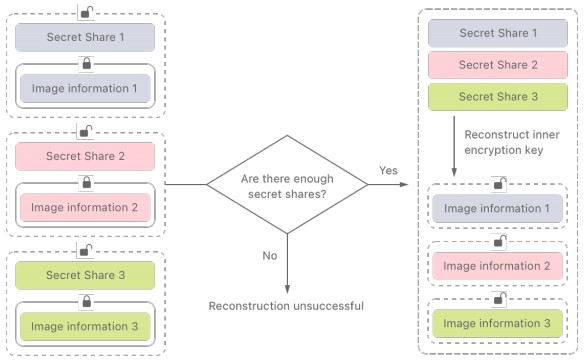

Moreover, the company published a document with more technical details on how CSAM Detection will work. It clarifies that user images will be compared against databases of known, previously identified child abuse material supplied by organizations in various countries, starting with the U.S. National Center for Missing and Exploited Children (NCMEC). Apple will perform the matching only through image hashes, without looking at the content itself. If more than 30 matches (reportedly the initial threshold) are found in a user's iCloud Photos library, the flagged content will go to a human reviewer for evaluation.
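To make the threshold idea concrete, here is a minimal Python sketch of "count hash matches, escalate only above a threshold." It is a deliberate simplification under stated assumptions: it uses exact SHA-256 digests from `hashlib`, whereas Apple's technical summary describes a perceptual hash (NeuralHash) combined with private set intersection and threshold secret sharing, so that no one learns about matches below the threshold. None of that cryptography appears here. The names `MATCH_THRESHOLD`, `image_hash`, `count_matches`, and `needs_human_review` are hypothetical, and the threshold of 30 simply mirrors the figure reported above.

```python
import hashlib
from typing import Iterable, Set

# Hypothetical value mirroring the reported initial threshold of 30 matches.
MATCH_THRESHOLD = 30


def image_hash(image_bytes: bytes) -> str:
    """Exact SHA-256 digest of the raw bytes.

    Assumption: a stand-in for a perceptual hash such as NeuralHash, which
    would also match resized or re-encoded copies; SHA-256 does not.
    """
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(images: Iterable[bytes], known_hashes: Set[str]) -> int:
    """Count images whose hash appears in the database of known hashes."""
    return sum(1 for img in images if image_hash(img) in known_hashes)


def needs_human_review(images: Iterable[bytes], known_hashes: Set[str],
                       threshold: int = MATCH_THRESHOLD) -> bool:
    """Escalate only when the number of matches exceeds the threshold."""
    return count_matches(images, known_hashes) > threshold


if __name__ == "__main__":
    # Toy data: 40 "known" images, and a library containing 31 of them.
    known = {image_hash(b"known-image-%d" % i) for i in range(40)}
    library = [b"known-image-%d" % i for i in range(31)] + [b"holiday-photo"]
    print(needs_human_review(library, known))  # True: 31 matches > 30
```

The point of the threshold is that a single match, which could be a false positive, never triggers review on its own; only an accumulation of matches does.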

Of course, Apple hasn't admitted to any change of plans, or even that it decided to be more transparent because of pressure from the community, but a week of calls to reconsider from a large number of influential people and respected organizations has almost certainly played a role. But are these explanations really convincing?

While a more detailed explanation of how CSAM Detection will work is a step in the right direction, the system inevitably introduces some risk to people's privacy. Having an image evaluation system in place opens up the possibility of abuse, and no matter what safeguards Apple puts in place, a backdoor is still a backdoor.

In the end, when it comes to closed-source software, it is a question of trust. Federighi said Apple will continue to refuse government requests to snoop on users' media with the same determination it has shown in the past, and the company is adamant on that point.