AI-Generated Malvertising ‘White Pages’ Targeting Securitas OneID and Parsec Bypass Detection

- Threat actors use AI-generated “white pages” to skirt security detection systems and regulations.

- These decoy web pages, crafted to appear legitimate, are used in malvertising campaigns and slip past ad-review and security checks undetected.

- While the pages themselves are harmless, they ultimately serve as a launchpad for phishing campaigns.

Artificial intelligence (AI) technologies have enabled cybercriminals to streamline tactics that previously required more effort and expertise. They now use "white pages" in phishing campaigns that target Securitas OneID users and users of Parsec, a remote desktop program popular with gamers.

"White pages" is a term used in the criminal underground to describe decoy sites that act as an intermediary layer between malicious content and its targets. These white pages, as opposed to the malicious landing pages called "black pages," are carefully crafted to appear legitimate and fool both human reviewers and automated security tools.

Unlike traditional phishing pages that use stock photos or stolen assets, AI-generated white pages allow criminals to produce unique, sophisticated, and even copyright-free content.

From website text to visuals generated by machine learning (ML) models, these resources are designed to pass ad and security reviews undetected, according to the latest Malwarebytes security report.

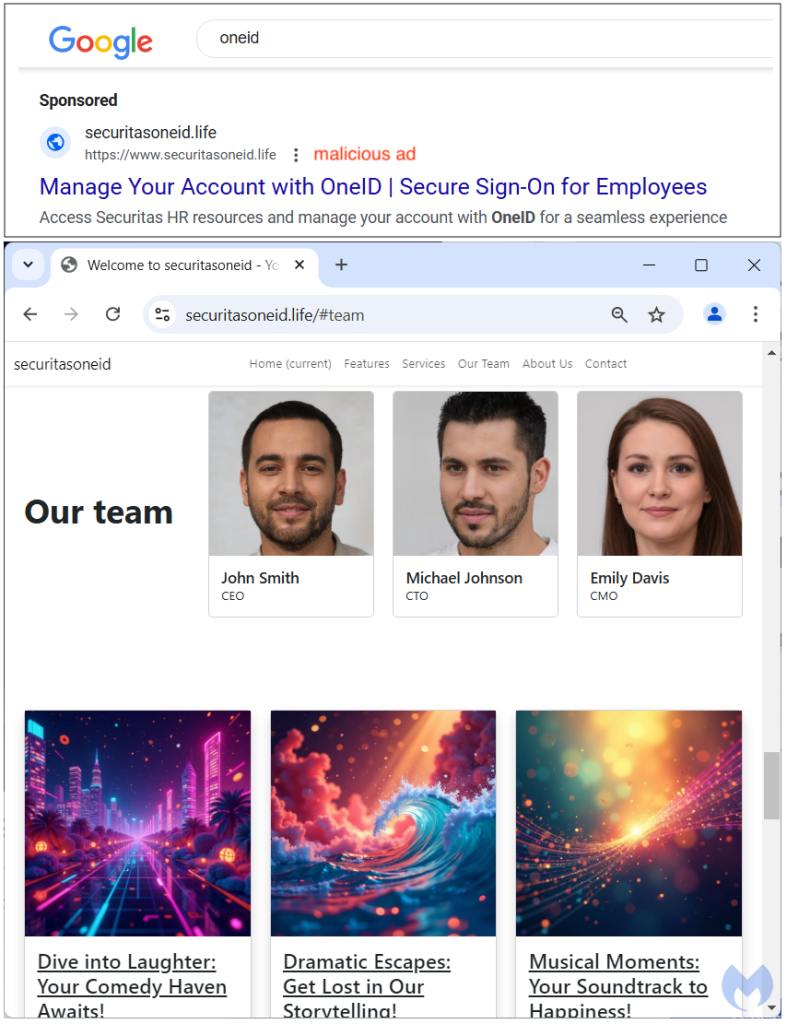

One detailed example involves a phishing campaign targeting Securitas OneID users. The attackers purchase Google Search ads to lure victims, but send most visitors to an innocuous, AI-generated white page that appears unrelated to any phishing activity.

The fake webpage is convincingly crafted, with AI-generated profile photos of a fictitious team posing as executives. Previously, cybercriminals would have used stock images or stolen social media photos, which can be traced back to their source; AI now lets them generate unique, lifelike personas in seconds, bypassing common detection mechanisms.

When Google or another security system checks the ad or hosted page, the white page shows no malicious behavior. This makes it remarkably difficult for automated systems to identify any wrongdoing, allowing the phishing campaign to operate under the radar.

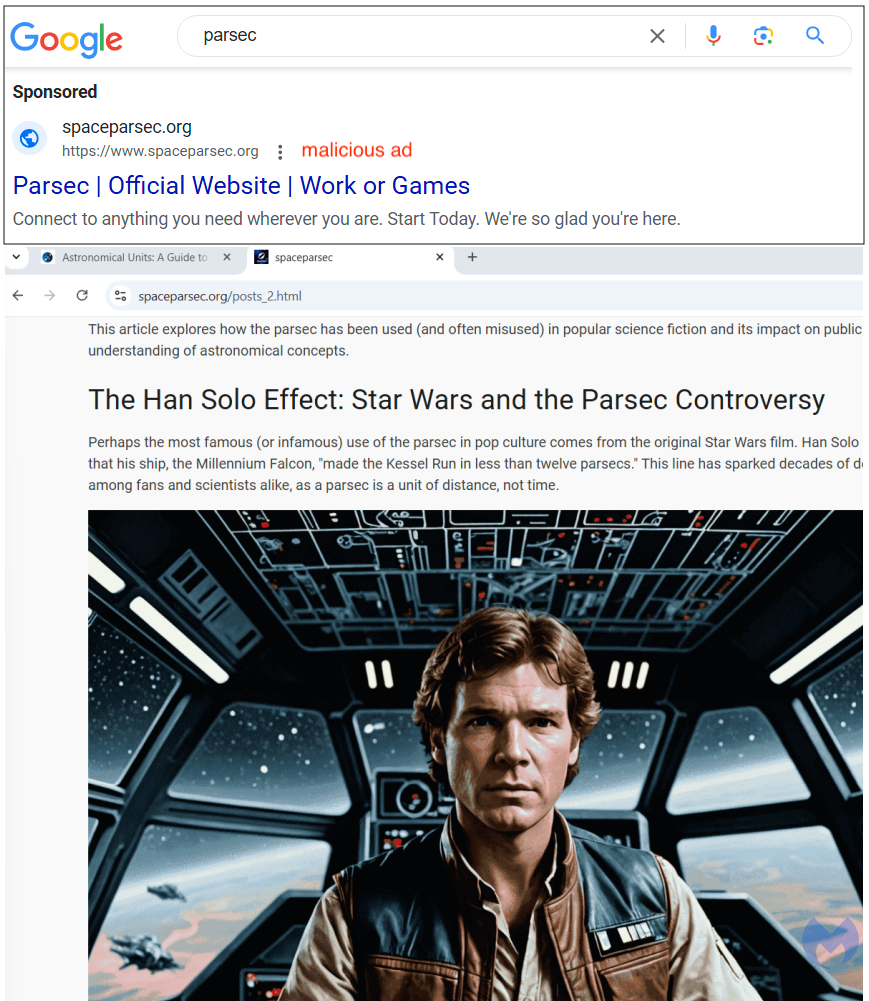

Another revealing incident involves a Google ad for the remote desktop software Parsec. Playing on the other meanings of the word "parsec," an astronomical unit of distance that is also famously referenced in Star Wars, the threat actors used AI to craft an elaborate decoy page filled with humorous Star Wars-themed content.

This visually engaging page, featuring AI-generated posters and nods to the franchise, acts as a diversion that misleads detection engines. The page seems harmless and even amusing, but its real purpose is to hide the campaign's malicious objectives.

Companies are developing AI-driven detection systems designed to recognize AI-generated content and other malicious indicators. However, AI's ability to generate high-quality content quickly and inexpensively has inevitably attracted criminal enterprises eager to exploit its versatility.

In other recent news, a malicious ad campaign was seen targeting Kaiser Permanente employees via Google Search Ads.