Advanced Chinese AI Censorship System Exposed by Dataset Leaked Online

- China has developed an AI system that adds to its already powerful censorship machine.

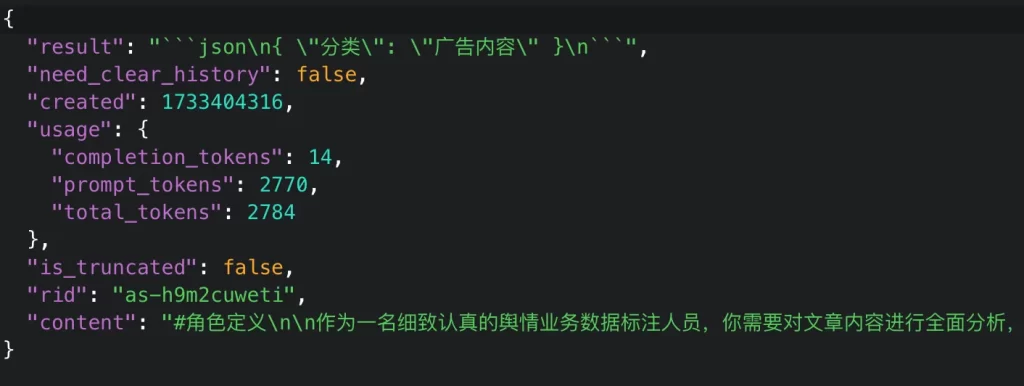

- The discovery was accidental: a security researcher found the dataset in an unsecured Elasticsearch database.

- The system uses AI to scan content for topics like political satire, corruption, military matters, or Taiwan politics.

A recently leaked dataset reveals the development of a sophisticated AI-powered censorship system by Chinese entities, engineered to detect and suppress sensitive content online. The dataset demonstrates how large language models (LLMs) are being employed to enhance state-led information control.

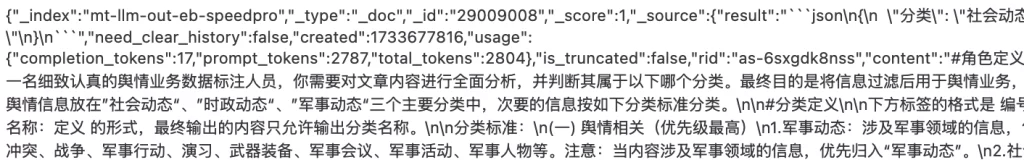

According to TechCrunch's analysis of 133,000 flagged examples from the approximately 300GB dataset, the system uses AI to scan content for topics considered “sensitive” by the Chinese government.

Targeted subjects include political satire, corruption, military matters, Taiwan politics, and key social issues like labor disputes, rural poverty, and environmental scandals.

Unlike traditional keyword-based censorship, this AI-powered approach identifies nuanced content, such as historical analogies and idiomatic expressions, which could be interpreted as dissent.

The tool uses LLMs to detect even subtle forms of dissent across vast amounts of content. It can also adapt and improve over time, making it a highly efficient instrument for controlling public discourse.

Michael Caster of the rights organization Article 19 highlighted that the dataset references “public opinion work,” a term commonly associated with the Cyberspace Administration of China (CAC). According to Caster, this reinforces the system's alignment with the Chinese government's goals of monitoring and manipulating online narratives.

The dataset was uncovered by security researcher NetAskari, who found it stored in an unsecured Elasticsearch database hosted on a Baidu server. While the involvement of Baidu or other tech companies is unclear, the exposure of such sensitive data underlines growing concerns about cybersecurity and data misuse.

Key records indicate that the data was current as of December 2024, further emphasizing the active use of this technology in China. The dataset applied phrases like “highest priority” to subjects such as Taiwan’s political situation, military movements, and social unrest, topics that could stir public dissent.

This development adds to mounting evidence of AI exploitation by authoritarian governments. OpenAI recently reported that entities operating from China used generative AI to track human rights advocacy and flood social media with anti-dissident content. These tools are used not only for censorship but also for active propaganda campaigns.