95% of Fake Account Reports on Social Media Are Ignored

- A team of researchers found that "big social" is failing badly at moderating fake content.

- Obviously fake accounts remain on the platforms weeks after being reported.

- The only way to tackle the problem now is to develop and deploy advanced AI-based systems.

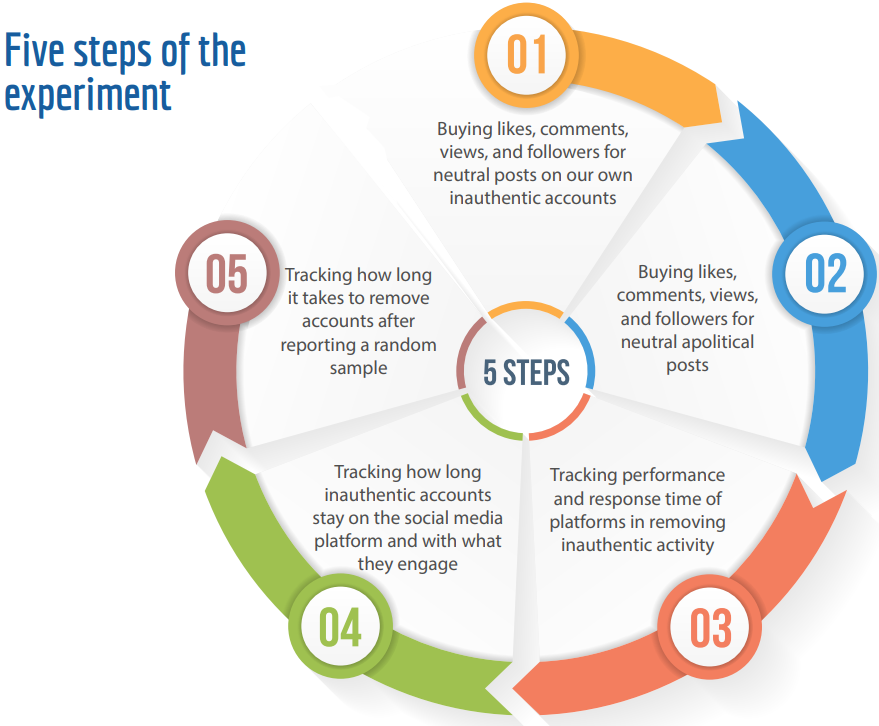

According to a study by Singularex, social media giants are failing to combat inauthentic accounts and fake content online, as the majority of what is reported to them is simply ignored. The study was conducted by a team of researchers at the NATO Strategic Communications Centre of Excellence (StratCom) and ran for four months (May to August 2019). The team created fake accounts, bought fake likes and shares, and then reported the activity to the platforms to see what would happen.

Source: StratCom

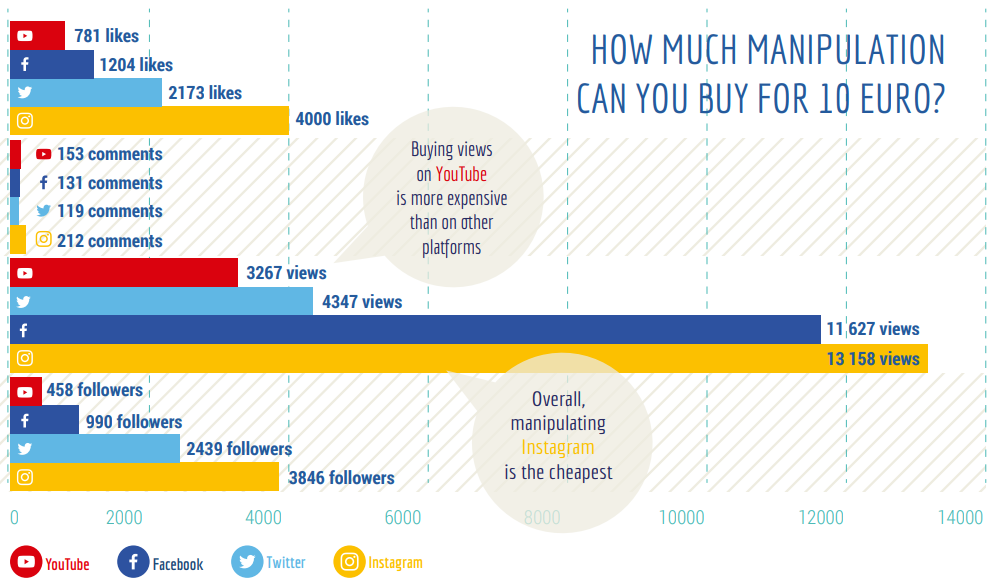

The team spent $332 on Facebook, Instagram, Twitter, and YouTube, buying thousands of comments, likes, views, and followers. Next, they used these interactions to trace back many thousands of fake accounts engaged in manipulation. After a full month, more than 80% of this fake activity was still online, so they reported a selection of the fraudulent accounts. Three weeks later, 95% of the reported accounts were still active. The experiment exposes the inadequacy, inability, and reluctance of social media giants to deal with fake accounts, even when those accounts are flagged to them through reports.

Source: StratCom

The platforms point to the sheer size of their communities as an excuse, arguing that even the most sophisticated automated tools cannot keep up. Facebook, for example, has 2.2 billion users active every day across its main platform and Instagram. Naturally, an enormous number of posts are reported every day, and only a finite number of human reviewers are available to look into them. How effective can this process really be? Judging by the study, about 5% effective over three weeks. Submitting repeated reports does seem to help in some cases, but it makes no dramatic difference.

So, the only solution right now is to develop and optimize specialized software and AI-based tools capable of handling the enormous volume of reports. Hiring more staff is practically impossible, as social media platforms already run their review departments at the largest feasible size.

Of the four platforms tested, Twitter was the most effective at dealing with inauthentic behavior, blocking 35% of the fake accounts soon after they were reported. In addition, purchased engagement shows up much later on Twitter and is delivered at lower quality, which makes abuse harder to achieve.

Do you have anything to say about the above? Share your thoughts with us in the comments section below, or on our socials, Facebook and Twitter.