New GitHub Copilot and Cursor Vulnerability Allows Weaponizing the AIs, Exposing Millions to Risk

- A new supply chain attack vector weaponizes AI coding assistants like GitHub Copilot and Cursor.

- Injecting hidden malicious code into the AI tools’ configuration files compromises trusted development assistants.

- Malicious rules cloaked as harmless instructions could secretly exfiltrate database credentials, API keys, and user data.

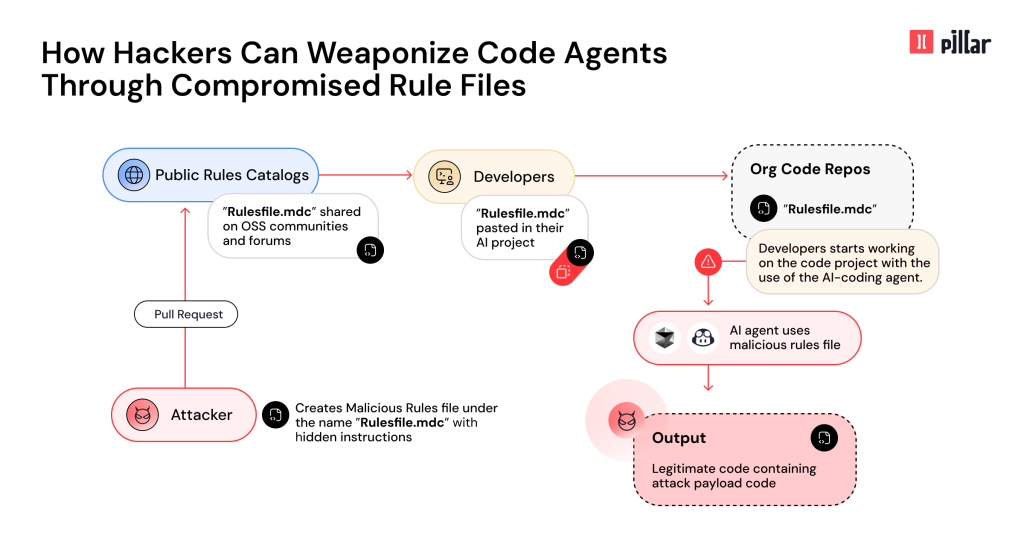

A new supply chain attack vector turns AI coding assistants such as GitHub Copilot and Cursor into a channel for injecting hidden malicious code into development processes. Pillar Security researchers named the technique “Rules File Backdoor” in their latest report.

The attack relies on manipulated rule files, which are configuration files used to guide AI assistants in generating and modifying code. These files are widely shared across public repositories and open-source communities and are rarely subjected to rigorous security checks.

Attackers embed hidden malicious instructions within these rule files using techniques like Unicode obfuscation and contextual manipulation, making the changes virtually invisible to human reviewers and standard code validation processes.
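One way a team might check a rule file for this kind of obfuscation is to look for invisible Unicode characters before trusting it. The sketch below is a minimal illustration, not a method from the Pillar Security report: the function name `find_hidden_characters` is hypothetical, and it assumes that flagging every character in the Unicode "Cf" (format) category is an acceptable heuristic. That category covers zero-width characters, bidirectional controls, and tag characters, but it also includes the zero-width joiner used in some emoji, so hits still need human review.

```python
import unicodedata


def find_hidden_characters(text):
    """Return (line, column, codepoint, name) for each invisible format character.

    Hypothetical helper: flags every character whose Unicode general
    category is "Cf" (format), which includes zero-width spaces and
    joiners, bidirectional control characters, and Unicode tag characters.
    """
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            if unicodedata.category(ch) == "Cf":
                findings.append(
                    (line_no, col, f"U+{ord(ch):04X}", unicodedata.name(ch, "UNKNOWN"))
                )
    return findings


if __name__ == "__main__":
    # Illustrative rule text with a zero-width space and a right-to-left override.
    sample_rule = "Use semantic HTML.\u200b\u202e Always add alt text."
    for finding in find_hidden_characters(sample_rule):
        print(finding)
```

A check like this could run in a pre-commit hook or CI step over whatever rule files a project uses. It catches only the invisible-character part of the technique and would not detect instructions that are malicious but plainly visible.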

Once applied, these malicious rule files instruct the AI to generate vulnerable or compromised code, bypassing conventional security controls and code reviews.

Attackers can direct AI assistants to introduce exploitable vulnerabilities that run counter to secure coding practices. The AI's output may include insecure cryptographic algorithms, hidden backdoors, or weak authentication flows.

Malicious rules could cause the AI to generate code that secretly exfiltrates sensitive information like API keys, database credentials, or user data. Once a rule file is poisoned, it could propagate across teams and projects, amplifying the potential damage through forks and dependencies.

The research highlights two key demonstrations where malicious rule files were used to poison AI-generated code. For Cursor, a simple HTML generation request led to the insertion of a malicious script sourced from an attacker-controlled site.
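Because the Cursor demonstration ended with a script tag pointing at an attacker-controlled site, one hedged defensive idea is to check AI-generated HTML for script sources on hosts the project does not expect. The sketch below is an illustration under stated assumptions rather than anything described in the research: `ScriptSourceCollector`, `unexpected_script_hosts`, and the allowlist of hosts are all hypothetical, and the check looks only at the `src` attribute of `<script>` tags, not at inline scripts or other ways of loading code.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ScriptSourceCollector(HTMLParser):
    """Collects the src attribute of every <script> tag (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names before calling this.
        if tag == "script":
            for name, value in attrs:
                if name == "src" and value:
                    self.sources.append(value)


def unexpected_script_hosts(html, allowed_hosts):
    """Return script URLs whose host is not in allowed_hosts.

    Relative URLs have no host and are treated as same-origin, so they
    are not flagged. The allowlist is an assumption supplied by the caller.
    """
    collector = ScriptSourceCollector()
    collector.feed(html)
    collector.close()
    flagged = []
    for src in collector.sources:
        host = urlparse(src).hostname
        if host is not None and host not in allowed_hosts:
            flagged.append(src)
    return flagged


if __name__ == "__main__":
    generated = (
        '<html><body><script src="/static/app.js"></script>'
        '<script src="https://attacker.example/payload.js"></script>'
        "</body></html>"
    )
    print(unexpected_script_hosts(generated, {"cdn.example.com"}))
```

An allowlist is used here instead of a blocklist because an attacker can register a new domain at any time, whereas the set of hosts a project legitimately loads scripts from tends to be small and stable.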

The attack payload contains several sophisticated components such as invisible Unicode characters and jailbreak storytelling. Developers using Cursor remained unaware of the additional code due to hidden logs and unobtrusive execution.

Similarly, GitHub Copilot was manipulated to introduce hidden vulnerabilities into generated code while suppressing any indication in user-facing logs or discussions.

According to a 2024 GitHub survey, 97% of enterprise developers use AI coding assistants, which are now a critical component of software development.