Chinese Researchers Hid Malware Inside AI Without Affecting Its Functions

- Researchers have shown that it is possible to hide malware inside the neurons of a machine learning model.

- The group tested replacing up to 50% of a model’s neurons with malware and saw only a minimal drop in accuracy.

- The team believes this novel method could soon become the main channel of malware distribution.

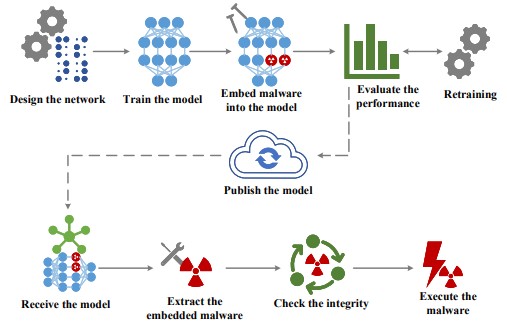

A team of three Chinese researchers published a paper titled “EvilModel,” in which they show that it’s possible to hide malware inside working neural network models, evading detection by commonly available anti-virus tools while leaving the model’s core functionality largely unaffected.

Their experiments showed that 36.9 MB of malware can be embedded into a 178 MB neural model with an accuracy loss within 1%, without raising suspicion from any AV engine on the VirusTotal service. Even if 50% of the neurons are replaced by malware, the model’s accuracy stays above 93.1%, a result that illustrates great potential for abuse.
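To give a feel for why a model can carry foreign bytes and still work, here is a minimal sketch using a harmless byte string. It assumes, purely for illustration, that each 32-bit weight donates its two low-order mantissa bytes; the article does not describe the paper's actual byte layout, and the names `embed`, `extract`, and `BYTES_PER_WEIGHT` are hypothetical.

```python
import struct

# Assumption for this sketch: only the 2 low-order bytes of each float32
# weight are overwritten, leaving the sign, exponent, and top mantissa bits.
BYTES_PER_WEIGHT = 2


def embed(weights, data):
    """Return a copy of `weights` with `data` written into the low-order bytes."""
    if len(data) % BYTES_PER_WEIGHT or len(data) > BYTES_PER_WEIGHT * len(weights):
        raise ValueError("data must be an even number of bytes that fits in the weights")
    out = list(weights)
    for i in range(len(data) // BYTES_PER_WEIGHT):
        raw = struct.pack(">f", weights[i])  # big-endian: sign/exponent byte first
        chunk = data[BYTES_PER_WEIGHT * i:BYTES_PER_WEIGHT * (i + 1)]
        out[i] = struct.unpack(">f", raw[:2] + chunk)[0]
    return out


def extract(weights, n_bytes):
    """Read back the first `n_bytes` stored in the low-order bytes."""
    raw = b"".join(struct.pack(">f", w)[2:] for w in weights)
    return raw[:n_bytes]


if __name__ == "__main__":
    weights = [0.5, -1.25, 0.03125, 2.0]   # toy "layer" of four parameters
    payload = b"harmless"                  # benign stand-in for embedded data
    stego = embed(weights, payload)
    drift = max(abs(s - w) / abs(w) for s, w in zip(stego, weights))
    print("recovered:", extract(stego, len(payload)))
    print("largest relative change in a weight:", drift)
```

Under this assumption, each normal, non-zero weight moves by less than 2^-7 (about 0.8%) of its value, which suggests why accuracy can degrade only slightly. Packing more bytes into each weight, as the authors reportedly suggest, would disturb the values more.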

This is a dire possibility, as AI tools are being adopted at a rising rate across a wide spectrum of industries and for a large variety of applications. To put it simply, companies buying commodity AI typically have no practical way to scrutinize these tools and find malware hiding in them, so they may end up with nasty infections or a spyware problem that goes undetected for years. In the meantime, the model will continue to operate as expected, performing all tasks normally and raising no red flags.

The technique is effective enough that the paper’s authors believe it will become the main method of malware distribution in the near future. On top of their findings, they point out that adversaries would have at least four ways to improve the hiding performance: retraining the model to restore some of the lost accuracy, embedding the malware closer to the output layer, embedding more malware bytes per neuron, and using batch normalization in the neural network design.

As for potential countermeasures, those could take the form of model verification, rigorous static and dynamic analysis, new heuristic methods, and general supply-chain security measures. To prevent such attacks, deploying AI on the defense side looks unavoidable right now.
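Model verification, the first of these countermeasures, can be as simple as comparing a downloaded model file against a digest published by its vendor. The sketch below assumes the vendor publishes a SHA-256 digest over a separate trusted channel; the function names `sha256_of_file` and `model_matches` are hypothetical.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for block in iter(lambda: fh.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def model_matches(path, expected_hex):
    """Return True only if the file's SHA-256 equals the published digest."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex.strip().lower())
```

A check like this only detects changes made after the digest was computed. It does nothing if the payload was already in the model when the vendor published it, which is why the other measures in the list still matter.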

All of this may look like a theoretical threat, but it isn’t, as AI-assisted tools are already widely deployed. Security vendors will soon be called upon to face this new menace and find ways to unearth and stop it.